Chapter 4: Universal Design

Introduction

Accessibility of digital resources was considered in Chapter 3. For most of the first decade of dedicated work in the field, the focus was on the work of the W3C Web Accessibility Initiative, and there was considerable optimism. WAI has continually engaged with experts from around the world to develop guidelines for resource content development, and for authoring and access tools. That effort was originally directed towards what is called universal design. Unfortunately, universal design has not happened, and this research argues that it alone is not capable of delivering on its promise.

In this Chapter, the history of the accessibility effort is presented briefly before the guidelines are introduced. The details of the guidelines are not important in this context. What is of significance is the aim of the guidelines, and therefore the goal of universal design. The strategies for universal design depend on the separation of content from its presentation, based on pre-Web success with this methodology. The Disability Rights Commission (UK) conducted the first major evaluation of the effectiveness of the WAI guidelines and their use, and produced some disappointing results. These are considered in detail. They have been replicated and commented upon by other observers.

The early history of accessibility

The Web, as a phenomenon separate from the Internet, emerged only in the 1990s, coming to the attention of even early adopters only by 1993 (W3C Web history, 2008). In 1994, in the abstract to "Document processing based on architectural forms with ICADD as an example", the International Committee for Accessible Document Design (ICADD) was described as:

committed to making printed materials accessible to people with print disabilities, eg. people who are blind, partially sighted, or otherwise reading impaired. The initiative for the establishment of ICADD was taken at the World Congress of Technology in 1991. (Harbo et al, 1994)

They noted:

This ambition presents a significant technological challenge. ICADD has identified the SGML standard as an important tool in reaching their ambitious goals, and has designed a DTD that supports production of both "traditional" documents and of documents intended for people with print disabilities (eg. in braille form, or in electronic forms that support speech synthesis).

They referred to Standard Generalized Markup Language [SGML], which was used to mark up presentation features for the printing of materials. Hypertext Markup Language [HTML], for the Web, was based on SGML.

After the first public meeting about the Web, WWW94, Dan Connolly (1994) reported:

One interesting development is that right now, HTML is compatible with disabled-access publishing techniques; i.e. blind people can read HTML documents. We must be careful that we don't lose this feature by adding too many visual presentation features to HTML.

This was before the World Wide Web Consortium was formed. Yuri Rubinsky, an ICADD pioneer, was at that conference. He had been involved in making sure that SGML could be used for more than standard text representations, and he and his colleagues did not want their work to be lost in the context of the new technology, the fast emerging Web.

Meanwhile, the World Wide Web Consortium [W3C] was being formed with host offices in Boston, Tokyo and Sophia-Antipolis in France. It came into existence in late 1994. A year later, at the fourth public meeting, WWW4 in Boston in December 1995, Mike Paciello, another ICADD pioneer, offered a workshop called "Web Accessibility for the Disabled".

Within a short time, the American academies were working on what they were calling at the time the National Information Infrastructure [NII]. It was a time of great expectations for the new technologies. In a report published in August 1997, the American National Academies called for work to ensure that the new technologies were accessible to everyone:

It is time to seek new paradigms for how people and computers interact, the committee said. ... No single solution will meet the needs of everyone, so a major research effort is needed to give users multiple options for sending and receiving information to and from a communication network. The prospects are exciting because of recent advances in several relevant technologies that will allow people to use more technologies more easily.

.. the point remains that we are still using a mouse to point and click. Although a gloriously successful technology, pointing and clicking is not the last word in interface technology.

.. New component designs also should take into account the varied needs of users. People with different physical and cognitive capacities are obvious audiences, but others would benefit as well. Communication devices that recognize users' voices would help both the visually impaired as well as people driving cars, for example. It is time to acknowledge that usability can be improved for everyone, not just those with special needs.

And later:

The report draws from a late 1996 workshop that convened experts in computing and communications technology, the social sciences, design, and special-needs populations such as people with disabilities, low incomes or education, minorities, and those who don't speak English. (National Academies, 1997)

It should be noted that the steering committee included Gerhard Fischer and Gregg

Vanderheiden, both already champions of the need for accessibility of electronic

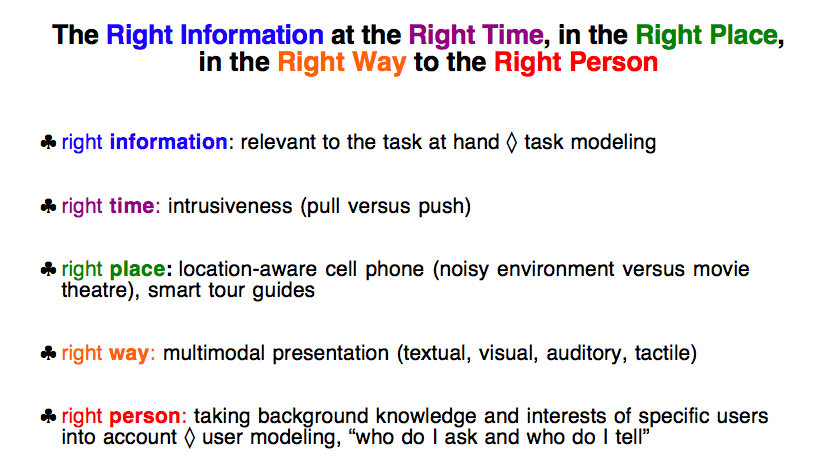

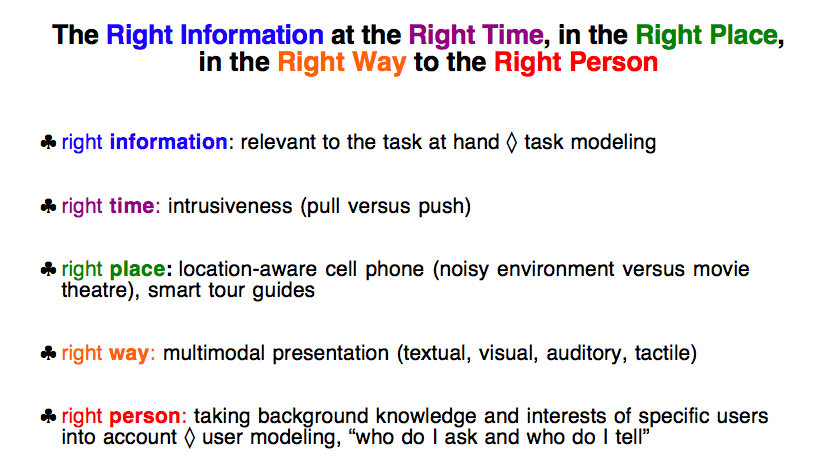

media. Fischer's slide of 1994 (Figure 23) shows the complexity of the problem:

Figure 23: The requirements for accessibility on the Web (Fischer, 1994)

Very soon after the report was released, in October 1997, the American National Science Foundation issued the following press release describing the scope of the new W3C Web Accessibility Initiative:

The National Science Foundation, with cooperation from the Department of Education's National Institute for Disability and Rehabilitation Research, has made a three-year, $952,856 award to the World Wide Web Consortium's Web Accessibility Initiative to ensure information on the Web is more widely accessible to people with disabilities.

Information technology plays an increasingly important role in nearly every part of our lives through its impact on work, commerce, scientific and engineering research, education, and social interactions. However, information technology designed for the "typical" user may inadvertently create barriers for people with disabilities, effectively excluding them from education, employment and civic participation.

Approximately 500 to 750 million people worldwide have disabilities, said Gary Strong, NSF program director for interactive systems. The World Wide Web, fast becoming the "de facto" repository of preference for on-line information, currently presents many barriers for people with disabilities.

The World Wide Web Consortium (W3C), created in 1994 to develop common protocols that enhance the interoperability and promote the evolution of the World Wide Web, is working to ensure that this evolution removes -- rather than reinforces -- accessibility barriers.

National Science Foundation and Department of Education grants will help create an international program office which will coordinate five activities for Web accessibility: data formats and protocols; guidelines for browsers, authoring tools and content creators; rating and certification; research and advanced development; and educational outreach. The office is also funded by the TIDE Programme under the European Commission, by industry sponsorships and endorsed by disability organizations in a number of countries.

"I commend the National Science Foundation, the Department of Education and the W3C for continuing their efforts to make the World Wide Web accessible to people with disabilities," said President Clinton. "The Web has the potential to be one of technology's greatest creators of opportunity -- bringing the resources of the world directly to all people. But this can only be done if the Web is designed in a way that enables everyone to use it. My administration is committed to working with the W3C and its members to make this innovative project a success". (NSF, 2007)

Things had moved very quickly behind the scenes. W3C had worked through its academic staff to gain the NSF's support for the project and politically manoeuvred the launch into the public arena with the support of a newly appointed W3C Director and the President of the US. (Interestingly, the US President's office contacted the author to see if the Australian Prime Minister (Howard at the time) would also like to endorse the grant. The Prime Minister's response declined the invitation and advised that this was not a priority (Howard, 1997).)

Sadly, Yuri Rubinsky died in 1996. Mike Paciello was the Executive Director of the Yuri Rubinsky Insight Foundation from 1996 to 1999, responsible for developing and launching the Web Accessibility Initiative (Paciello ???). Gregg Vanderheiden became the Co-Chair of the Web Content Accessibility Guidelines Working Group, and Mike Paciello, long expected to become the director of the W3C WAI, went elsewhere when Judy Brewer was appointed to that position.

Another significant player in this history was Jutta Treviranus. She had been working with Yuri Rubinsky at the University of Toronto and quickly emerged, with her colleague Jan Richards, as an expert who could lead the development of guidelines for the creation of good authoring tools.

At WWW6 in 1997, Treviranus argued that:

Due to the evolution of the computer user interface and the digital document, users of screen readers face three major unmet challenges:

- obtaining an overview and determining the more specific structure of the document,

- orienting and moving to desired sections of the document or interface, and

- obtaining translations of graphically presented information (i.e., animation, video, graphics)

She further stated that:

These challenges can be addressed by modifying the following:

- the access tool (i.e., screen reader, screen magnifier, Braille display),

- the browser,

- the authoring tools, (e.g., HTML, SGML, plug-in, Java, VRML authoring tools),

- the HTML specifications, HTML extensions, Style Sheets,

- the individual documents, and

- the operating system. (Treviranus, 1997)

Treviranus was already the Chair of the Authoring Tools Accessibility Working Group for W3C, and has been ever since. Clearly, the principles of the ICADD developments were on their way into the W3C guidelines.

With the appointment of Wendy Chisholm as a staff member at W3C, the work of TRACE, her former employer and the Wisconsin-based laboratory of Gregg Vanderheiden (co-chair of the WCAG Working Group), contributed significantly to the foundation of W3C's WAI. Judy Brewer, the Director of W3C WAI, was not an expert in content accessibility at the time but was strong in disability advocacy. The guidelines were already in early development by the time they were adopted by the W3C WAI.

Separation of Structure and Presentation

W3C WAI inherited, from ICADD's ISO 12083 and later standards, the architecture of documents in which a Document Type Definition [DTD] was used to describe the structure of the document. This was done using a common language that could be mapped to a common terminology. Any number of styles could be applied to those structural objects by a designer. Presentation could, and should, be separated from content, as the slogan goes.

ICADD is aware that it is unrealistic to expect document producers and publishers to use the ICADD DTD directly for production and storage. Instead a "document architecture" has been developed that permits relatively easy conversion of SGML documents in practically any DTD to documents that conform to the ICADD DTD for easy production of accessible versions of the documents. (Harbo et al, 1994)

This is important for its explanation of how, given an architecture for markup, a single application can be used to read the markup and present the content in different ways according to instructions about how to present each type of content. It was already the state of the art in 1994.
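
The idea that one application can read structural markup and present it in different ways can be illustrated with a minimal sketch. It is not the ICADD architecture or any W3C specification: the `Element` type, the element kinds and the two style tables are hypothetical names chosen only to show structure being kept apart from presentation.

```python
from dataclasses import dataclass


@dataclass
class Element:
    """One structural unit of a document (hypothetical, for illustration)."""
    kind: str  # structural role: "heading", "para" or "emphasis"
    text: str


# The document records structure only; it says nothing about appearance.
DOCUMENT = [
    Element("heading", "Opening hours"),
    Element("para", "The library is open every weekday."),
    Element("emphasis", "Closed on public holidays."),
]

# A "style" is a set of instructions for presenting each kind of element.
PRINT_STYLE = {
    "heading": lambda t: t.upper(),
    "para": lambda t: t,
    "emphasis": lambda t: f"*{t}*",
}

# A second style for the same structure, aimed at speech output.
SPEECH_STYLE = {
    "heading": lambda t: f"Heading: {t}.",
    "para": lambda t: t,
    "emphasis": lambda t: f"Note: {t}",
}


def render(document, style):
    """Present the same structured content according to the given style."""
    return "\n".join(style[el.kind](el.text) for el in document)


if __name__ == "__main__":
    print(render(DOCUMENT, PRINT_STYLE))
    print(render(DOCUMENT, SPEECH_STYLE))
```

The document itself never changes; only the style table passed to `render` does, which is the sense in which presentation is separated from content.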

The article further explains:

Still, the approach chosen by ICADD does seem to be a good one, despite its lack of full generality. The problem that ICADD faces is not only technical, it is also political and organisational. Improving access through the use of the ICADD intermediate format will only happen if information owners and publishers choose to support it; ICADD depends on the DTD developers to specify the mapping onto the ICADD tag set. By using architectural forms for the specification, ICADD reduces the perceived complexity of specification development; and the same time this development - by having the specification be physically part of the DTD - it is stipulated to be an integrated part of the DTD development itself, thus presumably increasing the chances of support from the DTD developers. (Harbo et al, 1994)

What they said of ICADD seems to have accurately predicted what would happen to Web content markup in the next decade. What is now obvious is that the influence of the early solutions and players was going to prove dominant. Maybe the SGML solutions were too easily taken for granted, as we can now see them as possibly a constraint on other ways of thinking about the emerging problem.

More media, same accessibility

It was but a short step to take the ICADD architecture into the Web world, as happened with the introduction of styles: machine-readable specifications for the presentation of structural elements in a Web page. Hypertext Markup Language [HTML] was the same kind of language as SGML, although far simpler, and, like SGML, referred to a DTD. The progress from the early use of computers to the Web introduced extensive use of multimedia, particularly graphics. HTML needed to be adjusted with element attributes that would turn the drift towards inaccessibility back towards some kind of accessibility. The challenge became not one of maintaining the mono-media character of early HTML, which had the qualities Connolly noted, but of finding ways to support the proliferation of media without compromising accessibility.

A simple example is provided by the tag that shows where an image is to be included. The <img> tag was given an attribute that would provide those who could not see the image with some idea of what it contained: the alt attribute. Later, a further attribute, longdesc, went further by pointing to a full description of the image.

The big idea was that the HTML DTD would specify the structural elements that should be used and the content would be interpreted, according to the provided styles, by the user agent, or 'browser' as it came to be known. What went wrong was that the browser developers were able to exploit this new technology to their advantage. By competitively offering browsers that could do more than any other, the browser developers constantly fragmented the standard. They offered both new elements and new ways of using them. The browser battles continue although, a decade later, for a variety of reasons, some browsers are appearing that adhere to the current standards.

The WAI Requirements

As the Web gained popularity, it acquired more and more users for whom it was inaccessible. As Tim Berners-Lee pointed out in an early presentation of the Web (Connolly, 1994), it had gone from being the communication medium for a lot of geeks who were content with text to a mass medium, and in the process lost some of its most endearing qualities, including the equity of participation that characterised the early Web.

The jointly-funded W3C WAI was chartered to:

create an international program office which will coordinate five activities for Web accessibility:

- data formats and protocols;

- guidelines for browsers, authoring tools and content creators;

- rating and certification;

- research and advanced development; and

- educational outreach. (NSF, 1997)

WAI was positioned, then, to receive supplications from all sorts of users who were finding the Web inaccessible, or from people acting on their behalf. As an open activity, anyone could (and can) join the WAI Interest Group mailing list and voice their opinion. This has been happening for more than ten years and the list of problems is very long. In that time, many obvious problems were identified early and the more difficult ones, such as the problems for people with dyslexia and dysnumeria, have emerged more recently. Many complaints have been repeated. They are generally classified into three types: problems to do with content, user agents and authoring tools, and so are channelled towards the three working groups responsible for those areas.

The Working Groups are more focused than the Interest Group and now have charters describing their goals, processes and achievement points that help them prepare a recommendation for the Director of the W3C. Essentially, what they do is gather requirements and describe those requirements in generic terminology, aiming to make their recommendations vendor- and technology-independent and future-proof.

The Working Groups consist of experts who do what experts do: generalise and specialise. One might say, then, that the WAI Working Groups are chartered to determine the relevant specialisations for consideration and to generalise from them to define guidelines for accessibility. The guidelines serve a number of purposes but a clear and specific use of them is to ensure that all W3C recommended "data formats and protocols" contribute to accessibility.

The W3C recommended WAI guidelines have assumed the role of data format and protocol standards. They have been promoted to content creators in their raw form. This has required a considerable support effort and generally, as predicted by ICADD (Harbo et al, 1994), has not been successful (see below).

WAI Compliance and Conformance

W3C is a technical standards organisation and its work is devoted to technical specifications. Whereas another type of organisation concerned about accessibility might have worked on developer practices, and what practices should be encouraged within the industry and developer community, possibly with the pressure of 'ISO 9001' type certification available, W3C has stuck to specifying technical output and been remarkably successful in this process. The result is that many countries, in adopting legal support for accessibility, have also relied on the content specifications. Unfortunately, they have usually ignored the authoring tools and user agent specifications.

The WAI specifications are written in general terms in an attempt to be technology neutral and future-proof. Conformance with general guidelines is not easily verified, so the requirements have to be reduced to specifics in each particular case in order to be tested. The working groups that are responsible for the generalisations support this process by producing specific examples in order to clarify what they mean by their generalisations but, of course, these do not fit every situation and so are often not relevant or helpful. In general, the problem is that all these things are subject to interpretation by people with more or less expertise and personal bias. The working groups endeavour to write their recommendations in unambiguous language but, of course, this is not always possible. The result is that conformance is not an objective, absolute quality.

Conformance with technical encoding formats and protocols is simpler. This is a machine determinable state. But accessibility depends upon the formats and protocols having been applied correctly, in the right context. This is a matter for human judgement. As the range of problems that users may have is infinite, it cannot be expected that the guidelines and associated re-defined formats and protocols will cover every possibility for inaccessibility. There are also many requirements that are not capable of such formal definition.
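
The gap between machine-determinable conformance and human judgement can be shown with a small sketch. It assumes a deliberately narrow check, the presence of an alt attribute on images, written with Python's standard `html.parser` module. The class name, function name and sample markup are hypothetical, and this is not how Bobby, LIFT or any other evaluation tool is implemented.

```python
from html.parser import HTMLParser


class AltChecker(HTMLParser):
    """Collects images with and without an alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []  # src values of images that have no alt attribute
        self.present = []  # (src, alt) pairs for images that do have one

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src", "")
        if "alt" not in attributes:
            self.missing.append(src)
        else:
            self.present.append((src, attributes["alt"]))


def check_alt(html):
    """Run the machine-determinable part of the check over some markup."""
    checker = AltChecker()
    checker.feed(html)
    checker.close()
    return checker


# Hypothetical sample page with three images.
SAMPLE = """
<p><img src="chart.png"></p>
<p><img src="logo.png" alt="image"></p>
<p><img src="map.png" alt="Map of the campus showing the step-free route"></p>
"""

result = check_alt(SAMPLE)

if __name__ == "__main__":
    print("No alt attribute:", result.missing)
    for src, alt in result.present:
        # The tool can report the text, but not whether it is of any use.
        print(f"{src}: alt={alt!r}")
```

The check fails only `chart.png`. The image whose alternative text is simply "image" passes, although it tells a user nothing; deciding that still takes a person who knows the context.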

Special resources for people with disabilities

Given the problems with accessibility, many developers have tried to avoid the problem by offering a 'text-only' version of their content. A major problem with this approach has been that the pages often get 'out of synch', with text-only pages not being updated with sufficient frequency. It is also a solution for only some of the problems of accessibility.

Many people with disabilities do not want to be treated as such: they want to be able to participate in the world equally with others, so they want to know what others are being given by a resource. They want an inclusive solution. They often prefer the idea of a universal resource, a one-size-fits-all solution that includes them. The Chair of the British Standards Institution's committee on Web Accessibility, Julie Howell (2008), considers this issue and asks whether it is equality of service or equality of Web sites that matters most.

The developers' early synchronisation objection to the text-only alternative disappeared when site management was handed over to software systems that were capable of producing both versions from a single authoring of content. This relies on a shift from client software responsibility for the correct rendering of the resource to the provision of appropriate components by authoring/serving software. What are called 'dynamic' sites respond to client requests by combining components on demand.

The motivation for accessibility often arises in a community of users rather than creators. It is common to find a third party creating an accessible version of a resource or part of the content of a resource for a given community. The production of closed captions for films is usually the activity of a third party, as is the foreign language dubbing of the spoken sound tracks. ubAccess has developed a service that transforms content for people with dyslexia. A number of Braille translation services operate in different countries to cater for the different Braille languages, and online systems such as Babelfish help with translation services.

The opportunity to work with third party augmentations and conversions of content is realised by a shift from universal design to flexible composition. Universal design has the creator responsible for the various forms of the content while flexible composition allows for distributed authoring. The server, in the latter case, brings together the required forms, determined by reference to a user's needs and preferences.

For flexible, distributed resource composition, metadata descriptions of both the user's needs and preferences and the content pieces available for construction of the resource are needed. The Inclusive Learning Exchange [TILE] demonstrates this. TILE uses metadata profiles to match resources to users' needs, with the capability to provide captions, transcripts, signage, different formats and more to suit users' needs (Chapter 10).
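
Matching metadata about content pieces against metadata about a user's needs and preferences can be sketched as follows. This is only an illustration under stated assumptions: the `Component` and `UserProfile` types, the modality labels and the selection rule are hypothetical, and they do not reproduce the metadata schema or the matching logic used by TILE.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Component:
    """Metadata describing one content piece (hypothetical fields)."""
    identifier: str
    modality: str  # how it is perceived: "visual", "auditory" or "textual"
    alternative_to: Optional[str] = None  # primary component it can replace


@dataclass(frozen=True)
class UserProfile:
    """Metadata describing what a user cannot make use of (hypothetical)."""
    unusable_modalities: frozenset


def compose(components, profile):
    """Select, for each primary component, a form the user can use."""

    def usable(component):
        return component.modality not in profile.unusable_modalities

    selected = []
    for primary in (c for c in components if c.alternative_to is None):
        if usable(primary):
            selected.append(primary.identifier)
            continue
        # Fall back to the first usable alternative, possibly third-party.
        substitutes = [
            c for c in components
            if c.alternative_to == primary.identifier and usable(c)
        ]
        if substitutes:
            selected.append(substitutes[0].identifier)
    return selected


# Hypothetical resource: a video with two alternatives, plus lecture notes.
COMPONENTS = [
    Component("lecture-video", "visual"),
    Component("lecture-audio-description", "auditory", "lecture-video"),
    Component("lecture-transcript", "textual", "lecture-video"),
    Component("lecture-notes", "textual"),
]

if __name__ == "__main__":
    no_vision = UserProfile(frozenset({"visual"}))
    print(compose(COMPONENTS, no_vision))
```

Because the alternatives are described by metadata rather than embedded in the original resource, they can be authored by anyone and added at any time, and only the selected components need to be sent to the user.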

Flexible composition satisfies the requirements for the users, allows for more participation in the content production, which is a boon for developers, and demands more of server technology. As noted elsewhere, this is suitable for increasing accessibility but also has the benefit that it limits the transfer of content that will not be of use to the recipient. This technique also saves on requirements for client capabilities, which is useful as devices multiply and become smaller. Economically too, it seems to be a better way to go (Jackson, 2004).

In summary, the history of the text-only page exemplifies the trends in resource provision:

- from static universal design to flexible dynamic composition

- from client to server responsibility for resource rendering

- from centralised authoring to distributed authoring

- from code-cutting designers to applications-supported designers

- from creator-controlled content to user-demanded content

Universal design

During most of the research period, the authoritative version of the WCAG has been "Web Content Accessibility Guidelines 1.0, W3C Recommendation 5-May-1999" [WCAG-1]. A new version has been under development, in which the idea of universal design is maintained. The role of WCAG is still to support the developers as they choose what markup to use (of course, many of them are oblivious of the choices and their implications) and then to check that all is well.

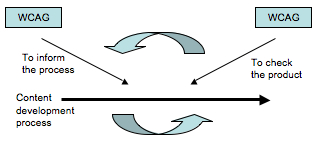

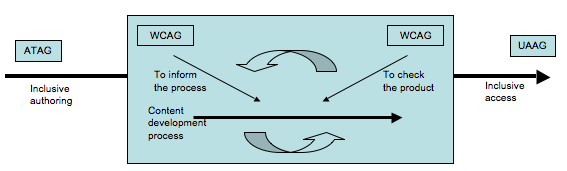

The role of the authoring tools and user agents guidelines is to specify how to make and use accessible content (Figure 24). This includes access to the software applications involved in these processes (Figure 25).

Universal Accessibility - the W3C Approach

There is no sense in which one would want to 'fault' the work of WAI in the area of accessibility. Like others, they have struggled to deal with an enormous and growing problem, and everyone has contributed all they can to help the cause. Nevertheless, it is clear that the work of WAI alone cannot make the Web accessible. Although a lot has been written about the achievements of the universal access approach, that is not the topic of the current work but rather its context. The first, and most comprehensive, evaluation of the accessibility of the Web was conducted by the UK Disability Rights Commission. It is, therefore, considered in detail in the next section.

The UK Disability Rights Commission Report

On 27 March 2003, the UK Disability Rights Commission [DRC] issued a press release announcing its "First DRC Formal Investigation to focus on web access". They planned to investigate 1000 Web sites "for their ability to be accessed by Britain’s 8.5 million disabled people". They said that "A key aim of the investigation will be to identify recurrent barriers to web access and to help site owners and developers recognise and avoid them."

Significantly, this testing would not just be done by people evaluating the Web sites against a set of specifications, but they would also involve 50 disabled people in in-depth testing of a representative sample of the sites, testing in their case for practical usability. They claimed that, "This work will help clarify the relationship between a site’s compliance with standards and its practical usability for disabled people". (DRC, 2003)

On 30 April 2003, Accessify carried the following report of the briefing for the DRC project:

.. it isn't a 'naming and shaming' exercise. What exactly does it entail then? Well, the format is basically this - 1,000 web sites hosted in Great Britain are going to be tested using automated testing tools such as Bobby and LIFT. From that initial 1,000 a further 100 sites will undergo more rigorous testing with the help of 50 people with a varying range of disabilities, varying technical knowledge and all kinds of assistive devices. ..

The aim is to go beyond the simple testing for accessibility (although those original 1,000 sites will only have the automated tests) - the notion put forward is "Accessibility for Usability" ... which to these ears sounds like another term for 'Universal Design' or 'Design For All'. I'm not sure I appreciate the differences, if indeed there are any. It's certainly true that getting a Bobby Level AAA pass does not automatically make your site accessible, and it certainly doesn't assure usability. The interesting thing about this study, in my opinion, is how clear the correlation is between sites that pass the automated Bobby tests and their actual usability as determined by the testers. Will a site that has passed the tests with flying colours be more usable? I suspect that the answer will usually be yes. After all, if you have taken time and effort to make a site accessible, the chances are you have a good idea about the usability aspect. We will see ... (Accessify, 2003a)

A year later, after the report was released, OUT-Law published an article about it (2004):

City University London tested 1,000 UK-based sites on behalf of the DRC... Its findings, released yesterday, confirmed what many already suspected: very few sites are accessible to the disabled – albeit an inaccessible site presents a risk of legal action under the UK's Disability Discrimination Act.

However, while the report did not "name and shame" the 808 sites that failed to reach a minimum standard of accessibility in automated tests, City University has today revealed five "examples of excellence" from its study:

- egg.com (Internet bank)

- oxfam.org.uk (charity)

- sisonline.org (spinal injuries voluntary organisation)

- copac.ac.uk (on-line catalogues of research libraries)

- whoohoo.co.uk (comedy dialect translator)

... Despite these examples of excellence, the overwhelming majority of websites were difficult, and at times impossible, for people with disabilities to access.

... In its automated tests, City University checked for technical compliance with the World Wide Web Consortium (W3C) guidelines. ...

... while 1,000 sites underwent automated tests, City University put 100 of these sites to further testing by a disabled user group.

That group identified 585 accessibility and usability problems; but the DRC commented that 45 per cent of these were not violations of any of the 65 checkpoints listed in the W3C's Web Content Accessibility Guidelines, or WCAG.

The report was based on Version 1.0 of the WCAG – a version which has been around since 1999. The W3C was keen to point out that the WCAG is only one of three sets of accessibility guidelines recognised as international standards, all prepared under the auspices of the W3C's Web Accessibility Initiative. ...

The W3C explained that in fact its WAI package addresses 95 per cent of the problems highlighted by the DRC report. However, both the W3C and the DRC are keen to point out that they are working towards a common goal: to make websites more accessible to the disabled. (Out-Law, 2004)

Judy Brewer, Director of W3C WAI, acknowledged the problems demonstrated by the DRC Report. She predicted that the forthcoming version of the content guidelines would overcome some of them. She said the new version of the guidelines would be different in style.

Out-Law continued:

This change of style should help: another recent study, by web-testing specialist SciVisum, found that 40 per cent of a sample of more than 100 UK sites claiming to be accessible do not meet the WAI checkpoints for which they claim compliance. Brewer said this is not unusual: "We noticed that over-claiming a site's accessibility by as much as a-level-and-a-half is not uncommon." So Version 2.0 should be more precisely testable.

The reason for the W3C statement on the DRC findings was, said Brewer, to minimise the risk that the public might interpret the findings as implying that they cannot rely on the guidelines.

City University's Professor Petrie told OUT-LAW: "Our report strongly recommends using the WCAG guidelines supplemented by user testing – which is a recommendation made by W3C." She added that the University's data is "completely at W3C's disposal" for its continuing work on WCAG Version 2.0.

Both the W3C and the DRC are keen to point out that developers should follow the guidelines for site design – WCAG Version 1.0 – but they should not follow these in isolation: user testing, they both agree, is very, very important. (Out-Law, 2004)

Out-Law's commentary is interesting because it takes a critical position on the report and on its relationship to WCAG Versions 1 and 2. Usability and human testing of content emerged as critically important to accessibility (DRC, 2004b, p. v). These comments will be considered in more detail in the following chapters.

DRC Report findings

The DRC

Report authors tend to use the term 'inclusive design'

rather than universal design. They comment that:

Despite the obligations created by the DDA, domestic research

suggests that compliance, let alone the achievement of best

practice on accessibility, has been rare. The Royal National

Institute of the Blind (RNIB) published a report in August

2000 on 17 websites, in which it concluded that the performance

of high street stores and banks was “extremely disappointing” [2000].

A separate report in September 2002 from the University of

Bath described the level of compliance by United Kingdom

universities with website industry guidance as “disappointing”

[Kelly,

2002]; and in November 2002, a report into 20 key “flagship” government

websites found that 75% were “in need of immediate

attention in one area or another” [Interactive

Bureau, 2002]. Recent audits of the UK’s most

popular airline and newspaper websites conducted by AbilityNet reported

that none reached Priority 1 level conformance and only one

had responded positively to a request to make a public commitment

to accessibility. (DRC,

2004b p. 4)

They further confirmed that the introduction of the guidelines and of local legislation had not succeeded in making Web sites accessible. This time they were reporting on the state of affairs in the UK:

It is the purpose of this report to describe the process

and results of that investigation, and to do so with particular

regard to the relationship between formal accessibility guidance

(such as that produced by the WAI) and the actual accessibility

and usability of a site as experienced by disabled users.

(DRC,

2004b, p. 5)

The overall finding includes the comment that compliance with

the WAI guidelines does not ensure accessibility. Finding 2

contains the sub-point 2.2:

Compliance with the Guidelines published by the Web Accessibility

Initiative is a necessary but not sufficient condition for

ensuring that sites are practically accessible and usable

by disabled people. As many as 45% of the problems experienced

by the user group were not a violation of any Checkpoint,

and would not have been detected without user testing. (DRC,

2004b, p. 12)

The report goes on to describe many things that could be done by people, including training of Web content providers and Web users, proactive efforts by those with front-line responsibility, such as librarians, and more.

Finding 5 states:

Nearly half (45%) of the problems encountered by disabled

users when attempting to navigate websites cannot be attributed

to explicit violations of the Web Accessibility Initiative

Checkpoints. Although some of these arise from shortcomings

in the assistive technology used, most reflect the limitations

of the Checkpoints themselves as a comprehensive interpretation

of the intent of the Guidelines. (DRC,

2004b, p. 17)

The level of compliance with the guidelines was amazingly

low, even given the common perception that compliance levels

are not high:

- Of 1000 pages tested, 81% [failed] even the lowest level of compliance as tested by automatic testing tools, which can only detect some kinds of lack of compliance, so clearly less than 19% would be even Level 1 compliant.

- Of the 1000, only 6 pages passed the automated testing part for Level 1 and 2, indicating that at most 6 would be Level 2 compliant. In fact, only 2 of the original 1000 passed this phase of testing when they were manually checked.

- No pages were found to be Level 3 compliant. (DRC, 2004b, pp. 22, 23)

In addition to the proportion of home pages that potentially

passed at each level of Guideline compliance, analyses were

also conducted to discover the numbers of Checkpoint violations

on home pages. Two measures were investigated. The first

was the number of different Checkpoints that were violated

on a home page. The second was the instances of violations

that occurred on a home page. For example, on a particular

home page there may be violations of two Checkpoints: failure

to provide ALT text for images (Checkpoint 1.1) and failure

to identify row and column headers in tables (Checkpoint

5.1). In this case, the number of Checkpoint violations is

two. However, if there are 10 images that lack ALT text and

three tables with a total of 22 headers, then the instances

of violations is 32. This example illustrates how violations

of a small number of Checkpoints can easily produce a large

number of instances of violations, a factor borne out by

the data. (DRC,

2004b, p. 23)
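The distinction the DRC draws between the number of Checkpoints violated and the number of instances of violations can be sketched in a few lines of Python. This is only an illustration of the counting, not the DRC's tooling: the class `MissingAltCounter`, the function `summarise` and the page fragment are hypothetical, and the figure of 22 unidentified table headers is simply taken from the quoted example rather than detected.

```python
from collections import Counter
from html.parser import HTMLParser


class MissingAltCounter(HTMLParser):
    """Counts <img> elements that have no alt attribute (Checkpoint 1.1)."""

    def __init__(self):
        super().__init__()
        self.missing_alt = 0

    def handle_starttag(self, tag, attrs):
        # An empty alt="" is still an alt attribute, so it is not counted here.
        if tag == "img" and "alt" not in dict(attrs):
            self.missing_alt += 1


def summarise(instances_by_checkpoint):
    """Return (distinct Checkpoints violated, total instances of violations)."""
    counts = Counter(instances_by_checkpoint)
    violated = [checkpoint for checkpoint, n in counts.items() if n > 0]
    return len(violated), sum(counts.values())


# Hypothetical page fragment: ten images, none with ALT text.
page = "<p>" + '<img src="photo.png">' * 10 + "</p>"
parser = MissingAltCounter()
parser.feed(page)

# Assumption: the 22 unidentified table headers come straight from the
# DRC example; no attempt is made to detect them automatically.
tally = {"1.1": parser.missing_alt, "5.1": 22}
checkpoints_violated, instances = summarise(tally)
print(checkpoints_violated, instances)  # 2 32
```

Two Checkpoints account for all 32 instances, which is the pattern the report says is borne out by its data.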

Analysis of the instances of Checkpoint violations revealed

approximately 108 points per page where a disabled user might

encounter a barrier to access. These violations range from

design features that make further use of the website impossible,

to those that only cause minor irritation. It should also

be noted that not all the potential barriers will affect

every user, as many relate to specific impairment groups,

and a particular user may not explore the entire page. Nonetheless,

over 100 violations of the Checkpoints per page show the

scale of the obstacles impeding disabled people’s use

of websites. (DRC,

2004b, p. 24)

The report contains many statistics about the speed with which the users were able to complete tasks in what is generally understood as usability testing. It showed, in the end, that usable sites were usable regardless of disability needs.

On page 31, there is some explanation of the results:

The user evaluations revealed 585 accessibility and usability

problems. 55% of these problems related to Checkpoints, but

45% were not a violation of any Checkpoint and could therefore

have been present on any WAI-conformant site regardless of

rating. On the other hand, violations of just eight Checkpoints

accounted for as many as 82% of the reported problems that

were in fact covered by the Checkpoints, and 45% of the total

number of problems. (DRC,

2004b, p. 31)
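The two uses of "45%" in this passage are different quantities that happen to coincide. A rough check of the arithmetic, assuming only the rounded percentages quoted above (the variable names are illustrative, and the derived problem counts are approximate because the published percentages are rounded):

```python
TOTAL_PROBLEMS = 585      # problems found by the user panel (DRC, 2004b, p. 31)
SHARE_CHECKPOINT = 0.55   # share of problems related to Checkpoints
SHARE_TOP_EIGHT = 0.82    # share of Checkpoint-related problems from eight Checkpoints

# Approximate counts behind the two headline percentages.
checkpoint_related = TOTAL_PROBLEMS * SHARE_CHECKPOINT
not_covered = TOTAL_PROBLEMS - checkpoint_related

# 82% of the 55% that relate to Checkpoints, expressed as a share of all problems.
top_eight_share_of_all = SHARE_TOP_EIGHT * SHARE_CHECKPOINT

print(round(checkpoint_related))            # about 322 problems related to Checkpoints
print(round(not_covered))                   # about 263 problems outside any Checkpoint
print(round(top_eight_share_of_all * 100))  # 45 (per cent of all problems)
```

On these figures, roughly as many problems fell outside the Checkpoints altogether as were caused by the eight most frequently violated Checkpoints.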

After providing the details, the report continues:

Only three of these eight Checkpoints were Priority 1. The

remaining five Checkpoints, representing 63% of problems

accounted for by Checkpoint violations (or 34% of all problems),

were not classified by the Guidelines as Priority 1, and

so could have been encountered on any Priority 1-conformant

site.

Further expert inspection of 20 sites within the sample

confirmed the limitations of automatic testing tools. 69%

of the Checkpoint related problems (38% of all problems)

would not have been detected without manual checking of warnings,

yet 95% of warning reports checked revealed no actual Checkpoint

violation.

Since automatic checks alone do not predict users’ actual

performance and experience, and since the great majority

of problems that the users had when performing their tasks

could not be detected automatically, it is evident that automated

tests alone are insufficient to ensure that websites are

accessible and usable for disabled people. Clearly, it is

essential that designers also perform the manual checks suggested

by the tools. However, the evidence shows that, even if this

is undertaken diligently, many serious usability problems

are likely to go undetected.

This leads to the inescapable conclusion that many of the

problems encountered by users are of a nature that designers

alone cannot be expected to recognise and remedy. These problems

can only be resolved by including disabled users directly

in the design and evaluation of websites. (DRC,

2004b, p. 33)

The final statement here is most important. It is the main

thesis of the DRC Report that usability testing involving people

with disabilities is essential to the effective testing of content.

What is significant is that there is such a low rate

of universal or, as Petrie says, inclusive accessibility.

It confirms that there is a great

need for more to be done, and that it is unlikely to be done

by the original content creators.

In a sense, the Report places responsibility on the users:

Disabled people need better advice about the assistive technology

available so that they can make informed decisions about

what best meets their individual needs, and better training

in how to use the most suitable technology so they can get

the best out of it. (DRC,

2004b, p. 39)

While this is a possible conclusion, it is asserted here that the conclusion could equally have been that a better method of ensuring user satisfaction should be developed. There is a general emphasis on responsibility and training in many commentaries on accessibility. Many call for more training of creators, for example, but perhaps this responsibility is misplaced.

It is also interesting to note

that the Report advocates more trust of users to select

what they need and want (possibly represented by assistants).

If money is to be spent, the use of better authoring tools

may prove cheaper than the training being advocated. And if

users need to be served better, perhaps removing the need for

them to translate their own needs into assistive technologies

has somewhat more potential?

Responses to the DRC Report

Petrie, the author of the DRC Report, and others say:

Indeed, accessibility is often defined as conformance to

WCAG 1.0 (e.g. [HTML

Writers Guild]). However, the WAI’s definition of accessibility

makes it much closer to usability: content is accessible

when it may be used by someone with a disability [W3C.

Web Accessibility Initiative Glossary].

Therefore the appropriate test for whether a Web site is accessible

is whether disabled people can use it, not whether it conforms

to WCAG or other guidelines. (Kelly

et al, 2005, p. 4)

They continue:

Thatcher [2004] expresses this nicely when he states that

accessibility is not “in” a Web site, it is experiential

and environmental, it depends on the interaction of the content

with the user agent, the assistive technology and the user.

(Kelly

et al, 2005, p. 4)

Kelly et al (2005)

argue that the DRC Report and other evidence show that there

is not yet a good solution to the accessibility problem but

that it clearly does not rest simply in a set of technical authoring

guidelines. In fact, they list factors that need to be taken

into account in the determination of accessibility:

- The intended purpose of the Web site or resource (what

are the typical tasks that user groups might be expected

to perform when using the site? What is the intended user

experience?)

- The intended audience – their level of knowledge both of

the subject(s) addressed by the resource, and of Web browsing

and assistive technology.

- The intended usage environment (e.g. can any assumptions

be made about the range of browsers and assistive technologies

that the target audience is likely to be using?)

- The role in overall delivery of services and information

(are there pre-existing non-Web means of delivering the

same services?)

- The intended lifecycle of resource (e.g. when will it be

upgraded/redesigned? Is it expected to be evolvable?) (Kelly

et al, 2005, p. 6)

They argue that priorities must be set for each context and

that

This process should create a framework for effective application

of the WCAG without fear that conformance with specific checkpoints

may be unachievable or inappropriate. (Kelly

et al, 2005, p. 7)

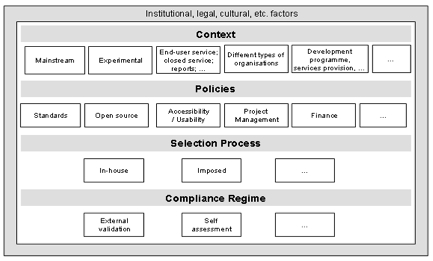

They provide an image of the wider context (Figure 26):

This framework offers one way of thinking about the problems.

But only a year later many of the same authors offered what

they call the 'tangram' approach (Chapter

5). It should

be noted that the proposed AccessForAll approach assumes an

operational framework that can include any and all of these

contextual issues.

Focusing on tools not products

When the problems with accessibility became clear, the W3C amended its HTML specifications to include some accessibility features [HTML 4.01]. Even better opportunities arose when Extensible Markup Language [XML] replaced HTML as a recommendation from the Director of W3C. XML is a powerful markup language that does the HTML markup work using mostly the same tags as the original HTML [XHTML], but is capable of doing a lot of other things as well. Despite the W3C Director's recommendation that people should not continue to use HTML, it is still used extensively.

It is the author's opinion that in many organisations, authors are still producing inaccessible and non-compliant resources because they are using the wrong authoring tools. Good authoring tools, that is, tools that conform to the W3C Authoring Tool Accessibility Guidelines [ATAG], are both more likely to be accessible for use by people with disabilities and more likely to produce resources that are accessible, without authors needing to learn XML. Unfortunately, the Authoring Tool Accessibility Guidelines have not been taken as seriously as the content guidelines. They are not usually part of legal frameworks for accessibility, and authors in general continue to use non-conformant authoring tools without questioning them. The point that is so often missed is that authors who use conforming tools, instead of the many non-conforming ones, can produce very accessible content 'unconsciously', without needing to know very much about accessibility. The author believes this would make a much bigger difference than the current approach of trying to make all content developers accessibility-skilled while they work with bad tools and raw markup.

The fact is that HTML continues to be used

very often in its raw form and little has been achieved in the way of

increased accessibility of the Web.

Chapter Summary

It is an open question whether WCAG should be the foundation of legislation for accessibility. This does not detract from its role as a standard for developers, but it suggests it is not a one-stop solution. Kelly (2008), in particular, has been outspoken about this. In reporting on the UKOLN-organised Accessibility Summit II event, 'A User-Focussed Approach to Web Accessibility', he said:

The participants at the meeting agreed on the need “to call on the public sector to rethink policy and guidelines on accessibility of the web to people with a disability”. As David Sloan, Research Assistant at the School of Computing at the University of Dundee and co-founder of the summit reported in an article published in the E-Government Bulletin “the meeting unanimously agreed the WCAG were inadequate”. (Kelly, 2008)

In the next chapter, other ways of approaching accessibility are considered.


This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 2.5 Australia License.

© 2008 Liddy Nevile
